Computational Cognition Lab

GitHub Google Scholar contact@cclab.ac

Research Strands

COMPUTATIONAL EXPLANATIONS

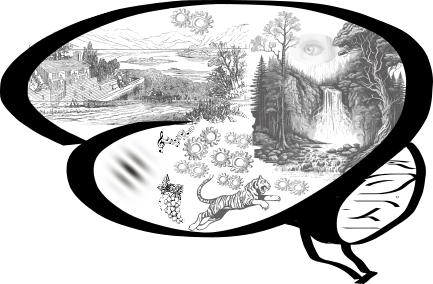

Computational models are increasingly used to understand the mechanisms that underlie human cognition. But to what extent are model-based explanations complete? How can we construct models from the perspective of an agent acting in a complex world and adjusting their behaviour from moment to moment? How can we detect these subtle adjustments in an agent's "cognitive state space"?

VISUAL REPRESENTATIONS IN DEEP NETWORKS

How do Deep Neural Networks (DNNs) represent objects and scenes? How do these representations compare to those generated by the human visual system? What can we learn about human visual representations based on differences between DNNs and humans?

THE COST OF THINKING

Humans possess meta-level control over their thought processes and frequently choose which thoughts to engage with and which cognitive computations to perform. How do we weigh up the mental energy required by various cognitive computations? Do we also consider how long these computations will take and their opportunity costs? What are the advantages of this control in solving reinforcement learning problems?

COMPLEXITY AND DECISION-MAKING

How do we learn to make decisions in a complex and dynamic world, where we compete with other individuals for resources? How do we balance the need for accuracy with the need for speed? How do we adapt our decision-making strategies to the constraints imposed by our cognitive resources?

ALIGNMENT BETWEEN AIs AND BRAINS

How can we compare human cognitive representations with those of Deep Neural Networks? The last few years have seen a proliferation of methods, from encoding models to representational similarity analysis. Do these methods provide a good metric for comparing the mechanisms used by the two systems? Can we design methods that allow us to understand how human representations align with those of models?

To get more information on some recent work, check out the Publications page.